Scammers are now cloning voices — and it fooled this mum into handing over $15k

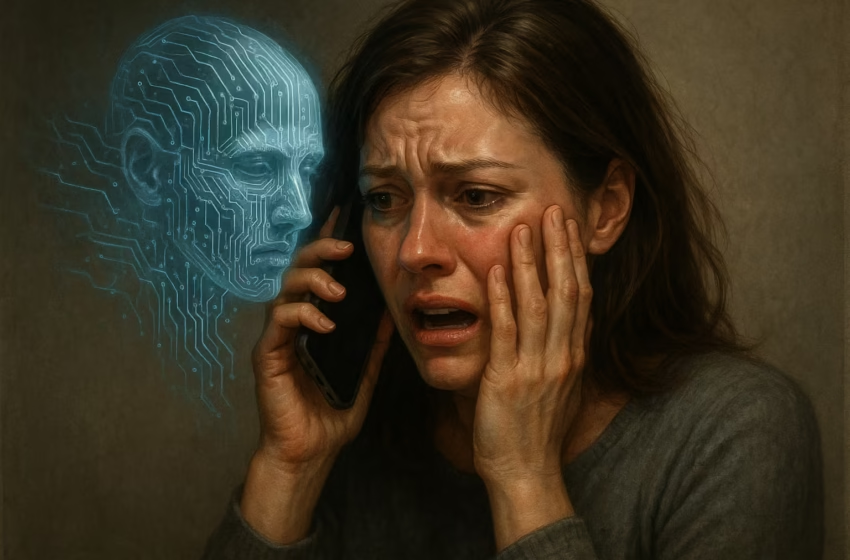

Distressed mum picking up an AI call

Last week, I read something that genuinely stopped me in my tracks. A woman in Florida got a phone call from a number that looked like her daughter’s. When she picked up, she heard her daughter sobbing, saying she’d been in a car crash and needed help.

It sounded exactly like her.

Terrified, she did what any parent would do — she tried to help. And in the panic of the moment, she ended up sending $15,000 to a complete stranger.

It turns out the whole thing was a scam. The voice she heard? It wasn’t her daughter. It was an AI-generated clone.

Honestly, it gave me chills.

This Isn’t Sci-Fi — It’s Happening Now

We’re not talking about some deepfake video shared online or a dodgy email from a “Nigerian prince”. This was a phone call. Real-time. Personal. Emotional.

And it worked because it hit where we’re most vulnerable: our families.

Think about it — if you got a call that sounded exactly like someone you love, in distress, crying, begging for help… would you stop to question it? Or would your heart take over?

That’s the terrifying part. AI tools have become so advanced that scammers can now clone someone’s voice from just a short audio clip. A few seconds from a TikTok, Instagram Story, or even a voicemail can be enough.

What Makes This So Dangerous?

Here’s what hit me the hardest: Sharon Brightwell, the mum who got scammed, knew something was off. But she still believed it was her daughter.

“There is nobody that could convince me that it wasn’t her,” she said.

That sentence has stuck with me.

It’s not that she was naive or careless. She was targeted with precision — the right number, the right voice, the right panic. And in that moment, logic didn’t stand a chance.

What Can We Do About It?

This is new territory for most of us. But there are a few things we can do to protect ourselves and our loved ones:

- Set up a family code word. Something simple but random that only close family knows. If someone calls in distress, ask for the code.

- Slow things down. Scammers rely on urgency. If someone demands money fast, pause. Hang up. Call the real person back on a known number.

- Stay informed. Talk about these scams with your family, especially older relatives who might be less tech-savvy.

- Limit what you share online. Public videos with your voice can be used for cloning. It doesn’t mean you need to go silent — just be aware.

The Bigger Picture

I don’t think stories like this are going away any time soon. As AI keeps getting better (and easier to use), we’re going to see more of these deeply personal scams.

That doesn’t mean we need to panic or unplug completely. But it does mean we need to get a bit savvier.

For me, the takeaway is simple: fear makes us act fast. But now more than ever, we need to pause — even when it feels impossible.

Because not every familiar voice is who we think it is.